These days, the term A/B testing is thrown around a lot, often in the context of “sure, we want to do it… eventually.” Awareness of A/B testing has risen sharply in recent years, due in part to success stories from peers and the efforts of industry-leading testing platforms. For the majority of organizations, however, the motivation to set a testing program in motion is still lacking, presumably because the true nature of A/B testing is unclear and its benefits uncertain. Let’s dig into each of those concerns one by one.

What is A/B Testing?

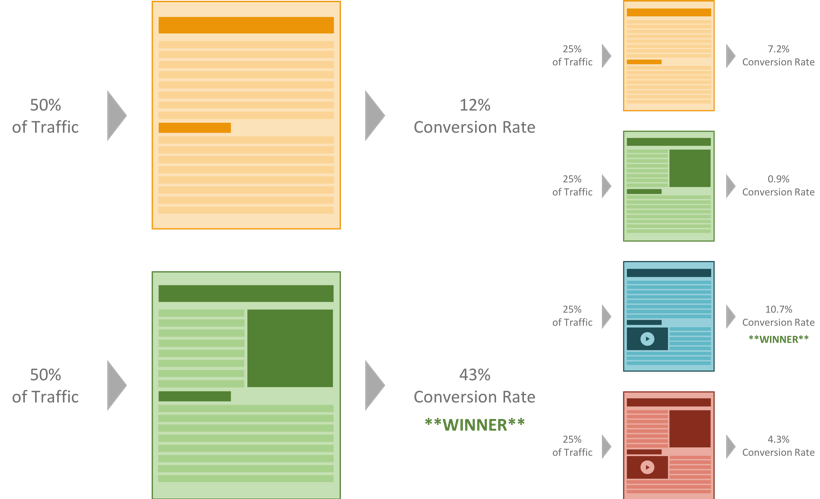

Sometimes also referred to as split testing, an A/B test is an experiment that compares the “as-is” version of a website or product (the control) against a proposed “to-be” version (the variant). The control and the variant are almost identical, with just a single element changed. As traffic comes in, each visitor or user is randomly assigned to one of the two versions, and that is the version they are shown.
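Testing platforms such as VWO and Optimizely handle assignment for you, but the idea behind it can be sketched. One common approach is to hash a stable user ID together with an experiment name: the split looks random across users, yet a returning visitor always lands in the same group. The function name, experiment name, and 50/50 split below are hypothetical, chosen only for illustration.

```python
import hashlib


def assign_variant(user_id: str, experiment: str,
                   variants=("control", "variant")) -> str:
    """Deterministically bucket a user into one of `variants` (hypothetical helper).

    Hashing "experiment:user_id" gives each user a pseudo-random but stable
    position, so the same user always sees the same version of this test,
    while a different experiment name reshuffles users independently.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    bucket = int(digest, 16) % len(variants)  # equal-sized buckets
    return variants[bucket]


# Usage: repeated calls for the same user return the same group.
print(assign_variant("user-42", "homepage-cta-test"))
```

Hashing instead of calling a random number generator on each visit means no assignment table has to be stored, and users don't flip between versions mid-test.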

It is, however, also possible to test more than one change at a time. A multivariate test changes several elements at once and compares combinations of them, while an A/B/n test compares more than one variant against the control. The primary benefit of these approaches is that multiple changes, or more complex solutions, can be tested. The downside is that each additional variant or combination splits your traffic further, so a larger number of users is needed to reach statistical significance.
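To see why multivariate tests need more traffic, it helps to count the cells. The sketch below assumes a full-factorial design, where every combination of element versions is served as its own version. The element names, their versions, and the daily traffic figure are all hypothetical.

```python
from itertools import product

# Hypothetical page elements and the versions being tried for each one.
elements = {
    "headline": ["current", "benefit-led"],
    "hero_image": ["photo", "illustration", "product shot"],
    "cta_text": ["Sign up", "Start free trial"],
}

# Full-factorial design: every combination of element versions is a separate cell.
combinations = list(product(*elements.values()))
num_cells = len(combinations)  # 2 * 3 * 2 = 12

daily_visitors = 6000  # hypothetical traffic
# Prints "12 combinations, ~500 visitors per combination per day"
print(f"{num_cells} combinations, ~{daily_visitors // num_cells} visitors per combination per day")

# A simple A/B test splits the same traffic only two ways.
# Prints "A/B test: ~3000 visitors per version per day"
print(f"A/B test: ~{daily_visitors // 2} visitors per version per day")
```

With six times fewer visitors per cell, each cell takes roughly six times longer to collect the same sample.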

Why Should You Care?

As digital professionals, we make a lot of assumptions based on the latest industry trends and supposed best practices. We push out messaging or design a product the way we assume is best and then wait to see how it performs. With A/B testing, however, we’re able to make much more informed decisions.

The volume of visitors (or users) at the top of your funnel, for example, is of little value unless they actually convert, or actually use your product. The goal of A/B testing is not simply to see which version wins (e.g., whether a red button or a blue button gets clicked more), but rather to observe users’ behavior and see which version increases conversion or usage rates. Running tests enables you to eliminate guesswork and make data-driven decisions, which reduces acquisition costs and increases ROI over the long term.
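In practice, “which version increases conversion” comes down to comparing conversion rates and the relative lift between them. A minimal sketch, assuming conversion rate means conversions divided by visitors; the function names and counts are hypothetical.

```python
def conversion_rate(conversions: int, visitors: int) -> float:
    """Share of visitors who completed the goal action."""
    return conversions / visitors


def relative_lift(control_rate: float, variant_rate: float) -> float:
    """Change in the variant's rate, as a fraction of the control's rate."""
    return (variant_rate - control_rate) / control_rate


# Hypothetical results: same number of visitors, different conversion counts.
control = conversion_rate(conversions=200, visitors=5000)   # 0.04
variant = conversion_rate(conversions=240, visitors=5000)   # 0.048
lift = relative_lift(control, variant)
# Prints "Control: 4.0%, variant: 4.8%, lift: +20%"
print(f"Control: {control:.1%}, variant: {variant:.1%}, lift: {lift:+.0%}")
```

A lift on its own does not say whether the difference is real or just noise; that question is answered by a significance test once the test has collected enough data.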

What Can You Test?

With the advancement of modern A/B testing solutions, there are actually very few limitations on what can be tested these days. Traditionally, when people think of A/B testing they imagine changing the wording of a call-to-action (CTA) or trying a new version of a marketing email, but the reality is that pretty much every element of your website or product is fair game.

Common examples include the text in headlines and body copy and the appearance, language, and positioning of CTAs. More advanced tests can include changes to your site’s menu structure and navigation, or even to the design of specific elements (typography, color scheme, etc.). And of course, in today’s mobile-first culture it’s critical to make sure your product is optimized for all devices. But don’t limit yourself to mobile-specific variants of desktop products; today’s platforms are fully capable of running tests in native mobile applications.

Planning Your First Test

So, you’re excited to begin A/B testing but don’t know where to start, right? First things first: before you even begin building out your variants, make sure you know the right thing to test.

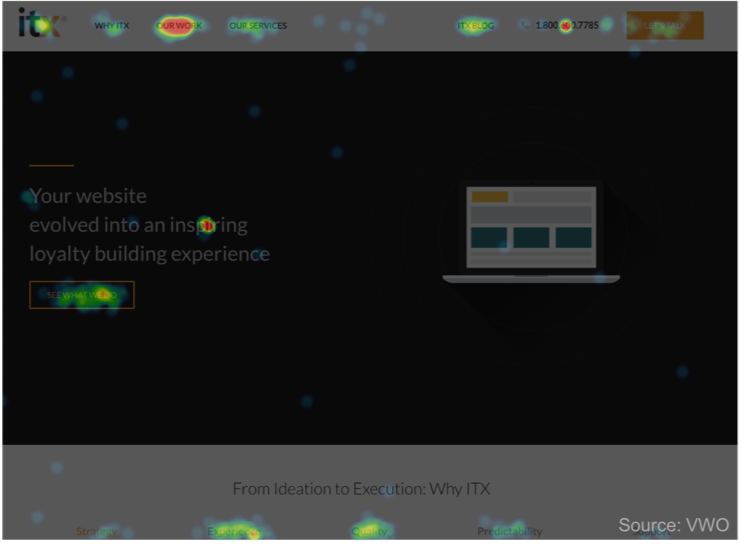

Step 1: Collect Data. It’s imperative that you first understand your baseline. Do you know where your users are having the most trouble, or where they are behaving in ways you didn’t expect? If you aren’t already doing so, you should be gathering a few different types of data to fully understand your users. Acquisition/navigation data shows where your users are coming from, how they progress through your product, and where you are losing them. A variety of web analytics platforms can provide this (Google Analytics being one of the most common). Once you know how you’re acquiring users, examine their on-page behavior: how long do they spend on a particular page, how far down the page do they scroll, and what do they click on? Heatmapping tools, such as CrazyEgg, answer these questions visually. Finally, collect direct feedback by asking your users why they are on your site, what they find difficult about it, and what problems you can help them solve.
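Web analytics tools report funnel drop-off for you, but the arithmetic behind “where you are losing them” is simple to sketch. The step names and visitor counts below are hypothetical, standing in for numbers you might export from an analytics report.

```python
# Hypothetical visitor counts for each step of a signup funnel.
funnel = [
    ("Landing page", 10000),
    ("Pricing page", 4000),
    ("Signup form", 1500),
    ("Account created", 600),
]


def drop_off(steps):
    """Yield (from_step, to_step, share of users lost between them)."""
    for (name_a, count_a), (name_b, count_b) in zip(steps, steps[1:]):
        yield name_a, name_b, 1 - count_b / count_a


for start, end, lost in drop_off(funnel):
    print(f"{start} -> {end}: {lost:.0%} drop-off")

# The transition that loses the largest share of users is a natural first
# candidate for heatmaps, user feedback, and eventually a test.
worst = max(drop_off(funnel), key=lambda row: row[2])
print("Largest drop-off:", worst[0], "->", worst[1])
```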

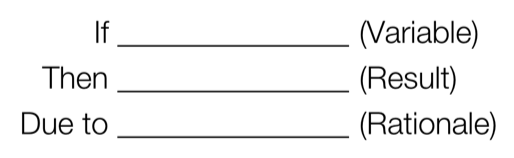

Step 2: Generate Hypotheses. Simply knowing the areas for improvement in your product and having an idea of what to test aren’t enough. Generating a hypothesis for your test will help you declare your assumptions, focus your scope, and set the expected outcome. To build your hypothesis, first state clearly the problem you’re trying to solve and the metric you’ll track to measure success. A high-quality hypothesis should follow this common structure:

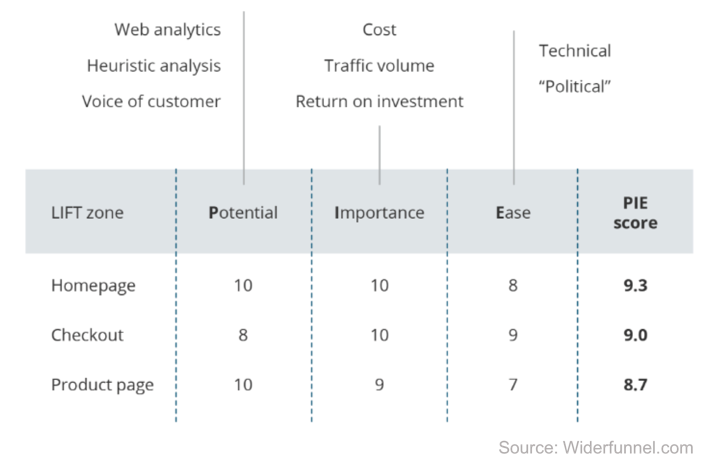

Step 3: Prioritize Ideas. Once you start looking at the data and generating hypotheses, you’ll likely find you have more ideas than you can test at once. It’s important to prioritize your tests so that you achieve successful results as quickly as possible and begin to see value. Using criteria such as Chris Goward’s PIE Framework (which focuses on the Potential, Importance, and Ease of a prospective test) is an elegant and effective way to sort through your ideas. By looking at each test’s potential, we can identify the largest opportunities for improvement, which typically exist on the worst-performing pages. Tests with high potential have the biggest opportunity to move the needle. Of course, some areas of your product are also more important to the business than others, such as key pages with the most traffic. Tests with high importance focus on the areas where poor performance costs the business the most. Finally, A/B testing isn’t just about moving mountains. By considering how easy each test is to implement, we can identify which ones will be the quickest to get up and running, and thus the quickest to deliver results.
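A PIE prioritization can be kept in a spreadsheet, but a short script shows the mechanics. This sketch assumes each criterion is scored from 1 to 10 and the three scores are averaged, which is how the framework is commonly applied. The `TestIdea` class and the backlog entries are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class TestIdea:
    """A candidate test scored on the PIE criteria (hypothetical structure)."""
    name: str
    potential: int   # 1-10: how much room for improvement the page has
    importance: int  # 1-10: how valuable the page and its traffic are
    ease: int        # 1-10: how quick and cheap the test is to build

    def __post_init__(self):
        for field_name in ("potential", "importance", "ease"):
            value = getattr(self, field_name)
            if not 1 <= value <= 10:
                raise ValueError(f"{field_name} must be between 1 and 10, got {value}")

    @property
    def pie_score(self) -> float:
        """Average of the three criteria."""
        return (self.potential + self.importance + self.ease) / 3


# Hypothetical backlog of ideas.
backlog = [
    TestIdea("Rewrite pricing page headline", potential=6, importance=8, ease=9),
    TestIdea("Redesign checkout flow", potential=9, importance=9, ease=3),
    TestIdea("New footer links", potential=3, importance=2, ease=10),
]

# Highest PIE score first.
ranked = sorted(backlog, key=lambda idea: idea.pie_score, reverse=True)
for idea in ranked:
    print(f"{idea.pie_score:.1f}  {idea.name}")
```

Here the easy headline rewrite outranks the high-potential but costly checkout redesign, which matches the point that ease matters alongside potential and importance.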

Executing Your Test

Now that you know exactly what test you want to run, all that’s left is building it and getting it out into the world. Every testing platform, including the industry leaders VWO and Optimizely, has its own set of best practices for the technical implementation of tests. What’s most important once your test is running is to have patience. Remember the old adage that a watched pot never boils. Once your first test is published and being delivered to your production users, you can check in and see real-time results, but it’s best to wait until the test has collected the sample size you planned for and reached statistical significance before drawing conclusions and declaring a winner. Stopping the moment the numbers first look significant makes a false winner more likely. Use this time instead to plan subsequent tests and drive other Continuous Innovation improvements to your site or product.

A couple of considerations do apply across platforms, however: test duration and audience targeting. When it comes to test duration, there is no magic number. How long your test needs to run depends primarily on how much traffic it receives, but also on its complexity (how many variants it has). A variety of free calculators can estimate the required sample size, and therefore the duration, from your baseline conversion rate, the smallest improvement you want to detect, and your daily traffic. Additionally, in certain situations it makes sense to present your variant only to a subset of your users. Audiences can be targeted by a variety of segmentation factors, such as geographic location, user type, and device type. Keep in mind, though, that segmenting your audience decreases the number of visitors included in the test, which increases the time needed to reach statistical significance.
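Duration calculators typically rely on the standard sample-size formula for comparing two proportions. The sketch below assumes a two-sided test at a 5% significance level with 80% power, and it does not adjust for testing several variants at once. The function names, the 4% baseline, the 10% relative lift, the traffic figure, and the 30% targeted share are hypothetical.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_group(baseline: float, relative_mde: float,
                          alpha: float = 0.05, power: float = 0.80) -> int:
    """Visitors needed in EACH group to detect a relative lift of
    `relative_mde` over `baseline` with a two-sided two-proportion z-test."""
    p1 = baseline
    p2 = baseline * (1 + relative_mde)
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    numerator = (z_alpha * sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return ceil(numerator / (p2 - p1) ** 2)


def estimated_days(baseline: float, relative_mde: float, daily_visitors: int,
                   num_groups: int = 2, share_targeted: float = 1.0) -> int:
    """Days until every group (control included) reaches its sample size.

    `share_targeted` is the fraction of traffic matching the audience
    targeting rules; a narrower audience means fewer eligible visitors a day."""
    per_group = sample_size_per_group(baseline, relative_mde)
    eligible_per_day = daily_visitors * share_targeted
    return ceil(per_group * num_groups / eligible_per_day)


# Hypothetical: 4% baseline conversion, hoping to detect a 10% relative lift.
print(sample_size_per_group(0.04, 0.10))                     # roughly 39,500 per group
print(estimated_days(0.04, 0.10, daily_visitors=5000))       # all traffic
print(estimated_days(0.04, 0.10, daily_visitors=5000, share_targeted=0.3))  # e.g. one segment
```

The last two calls show both points from the paragraph: adding groups multiplies the total sample needed, and targeting only part of the audience stretches the same requirement over more days.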

Analyzing the Results

Congratulations! Once your test has run its course, it’s time to look at the results and declare a winner. Did you achieve your expected outcome on the primary metric? If yes… great! Now build on that success in subsequent tests. If no… still great! Because you ran a controlled experiment, you can still learn from a hypothesis that wasn’t confirmed and use that to drive decisions moving forward.
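Most platforms compute significance for you, and some use Bayesian or sequential statistics rather than a classic fixed-sample test. As one common fixed-sample method, a two-proportion z-test checks whether the difference in conversion rates between control and variant is larger than chance would plausibly produce. The function name and the counts below are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)  # overall rate, assuming no real difference
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical final counts: control (A) vs. variant (B).
z, p = two_proportion_z_test(conv_a=400, n_a=10000, conv_b=470, n_b=10000)
print(f"z = {z:.2f}, p = {p:.3f}")
print("Significant at 95% confidence" if p < 0.05 else "Not significant at 95% confidence")
```

Here the variant’s 4.7% against the control’s 4.0% yields a p-value of about 0.015, below the conventional 0.05 threshold.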

There are some considerations to keep in mind when interpreting your results, though:

- The stronger the statistical significance (the lower the p-value), the more confident we can be that the difference is real rather than due to chance.

- This is a data point, not the final answer. A test that fails doesn’t necessarily disprove your hypothesis; the hypothesis may be correct and the test simply implemented poorly.

- Drill deeper into your results. Even if your hypothesis failed overall, it could have succeeded with certain segments.
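Drilling into segments amounts to repeating the comparison within each slice of traffic. The sketch below breaks hypothetical results down by device type. Each segment holds fewer visitors than the whole test, and checking many segments increases the chance that one looks like a winner by luck. A segment-level win is therefore best treated as a new hypothesis to test, not as a conclusion. The data layout and function name are hypothetical.

```python
# Hypothetical results by device type:
# segment -> group -> (conversions, visitors)
results = {
    "desktop": {"control": (300, 6000), "variant": (290, 6000)},
    "mobile": {"control": (100, 4000), "variant": (150, 4000)},
}


def segment_lifts(results):
    """Relative lift of the variant over the control within each segment."""
    lifts = {}
    for segment, groups in results.items():
        c_conv, c_n = groups["control"]
        v_conv, v_n = groups["variant"]
        control_rate, variant_rate = c_conv / c_n, v_conv / v_n
        lifts[segment] = (variant_rate - control_rate) / control_rate
    return lifts


for segment, lift in segment_lifts(results).items():
    print(f"{segment}: {lift:+.1%}")
```

In this made-up data the variant slightly underperforms on desktop and converts 50% better on mobile, which is the kind of pattern a single overall number would hide.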

Driving Adoption

A/B testing isn’t an overnight project. While it can be tempting to look for immediate proof that your A/B testing program is driving value, remember that you’re running experiments, and experiments need time to produce meaningful insights. There are, however, a few ways to help drive adoption of and support for your program. First, go after the “low-hanging fruit” to show progress and get some quick wins. It also helps to ask your broader team which problems they think are the biggest to tackle. Finally, set aside time on a regular basis to revisit your assumptions and generate new ideas.

References:

vwo.com. The Complete Guide to A/B Testing. https://vwo.com/ab-testing/

optimizely.com. Multivariate Testing. https://www.optimizely.com/optimization-glossary/multivariate-testing/

Matt Broedel (2018, January 30). The Benefits of User Testing: Why it Should Be Implemented in Every Product.

Steve Olenski (2017, January 9). How Marketers Can Reduce Customer Acquisition Cost. https://www.forbes.com/sites/steveolenski/2017/01/09/how-marketers-can-reduce-customer-acquisition-cost/#7913cfdd4063

Robin Johnson (2013, April 30). 71 A/B Testing Ideas. https://blog.optimizely.com/2013/04/30/71-things-to-ab-test/

vwo.com. How to Create Custom Segments for Mobile App Testing. https://vwo.com/knowledge/how-to-create-custom-segments-for-mobile-app-testing/

widerfunnel.com. Prioritize Your Tests with the PIE Framework. https://www.widerfunnel.com/pie-framework/

Sean Flaherty (2016, September 1). The Continuous Innovation Mindset.

Amanda Swan (2014, May 7). 10 Ways to A/B Test Better with Targeting. https://blog.optimizely.com/2014/05/07/10-ways-ab-test-better-with-targeting/