If you’re concerned about security only now that your team is working remotely, you’ve been doing it wrong.

Even as our economy inches toward reopening, the global coronavirus pandemic has forced many businesses to ask employees to continue working from home. While system security is always a top priority, a number of clients have raised their security concerns only now that their people are working remotely.

My first response is that they’ve been operating under a false sense of security if their controls are based on the physical location of their employees. Then, once I’m confident I have their undivided attention, I explain how a change in perspective can provide them with system security in our new remote work environment.

When I started working in the IT field, networking was a local concern. Especially for small businesses, you knew who had access to your local systems and could easily control for bad actors. Even in a larger university or multi-campus setting, these controls worked because there was a responsible party who could restrict or revoke system access.

In essence, security was local because all of your assets and users were local; you knew who you could explicitly trust because everyone who wasn’t local wasn’t trustworthy.

Setting Trust Boundaries in Systems

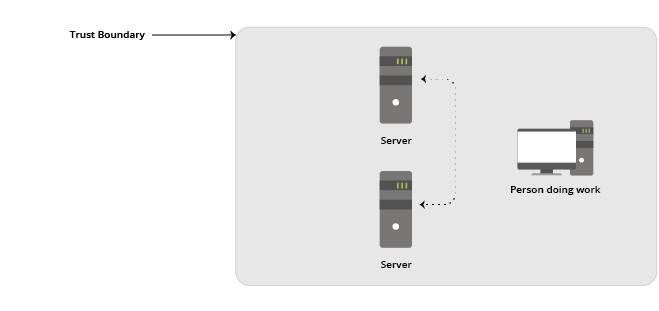

Confidentiality, Integrity, and Availability (CIA) could be maintained by securing everything and everyone within the building or within the known infrastructure. Think about this in terms of a Trust Boundary with users and assets secured together inside the boundary, behind the firewall. Everything else lives outside the boundary created by that device.

The Trust Boundary concept implies that users within it are trusted in a way that allows them special access to systems and services.

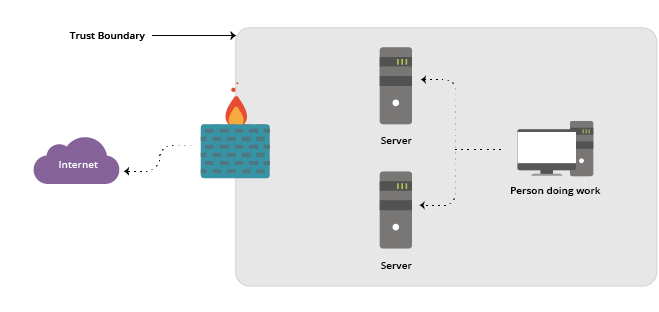

When we connected our systems to the internet, the model continued to work in much the same way. Business assets and employees remained local, with everything else outside.

The model implicitly tells us that we trust users and systems inside the boundary created by the firewall, and we do not trust users outside the boundary to make connections into our secured assets.

So historically, we “trusted” systems within the firewall and treated systems outside of the firewall as not trustworthy. But economies of scale began to break this model.

Subverting Trust Boundaries in Systems

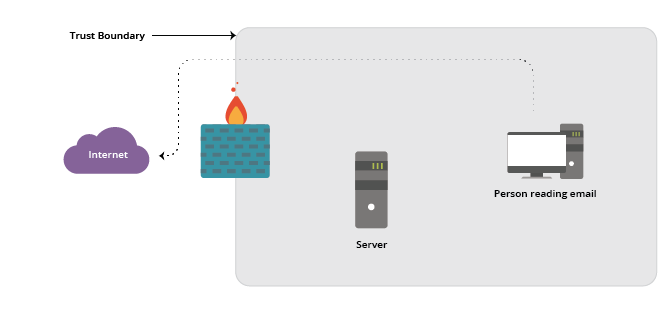

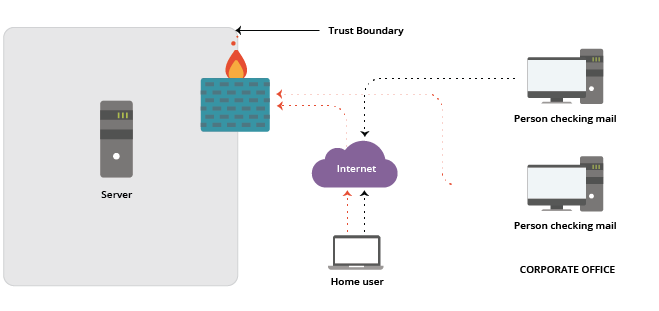

I was certainly among those who suggested that fighting spam and maintaining high availability on a private mail server exposed to the internet was an exercise in futility. Better to leave it to organizations like Google and Microsoft that could devote an appropriate effort to keeping these systems up and protected. But the model changed slightly without our noticing. Suddenly, we had a “trusted” system outside of our Trust Boundary.

What happened here?

We moved a protected asset (mail services) outside of the Trust Boundary without changing the way the local systems were configured or changing the relationship users had with the service.

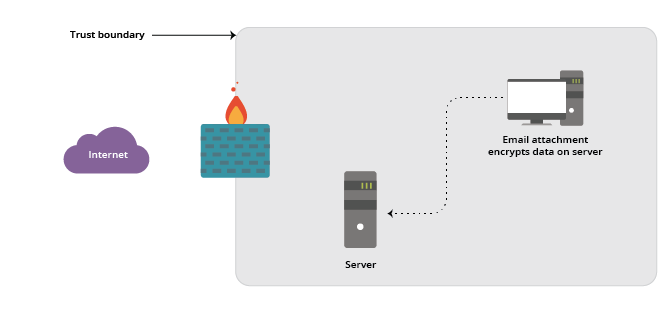

This has led to billions of dollars’ worth of time and money lost to email attacks. Consider the effects of viruses like the well-known Melissa virus and malware attacks like ransomware, each of which takes advantage of an internal “trusted” user downloading an email and launching an application that has the trusted user’s access to local systems.

Security Risks Associated with Remote Work

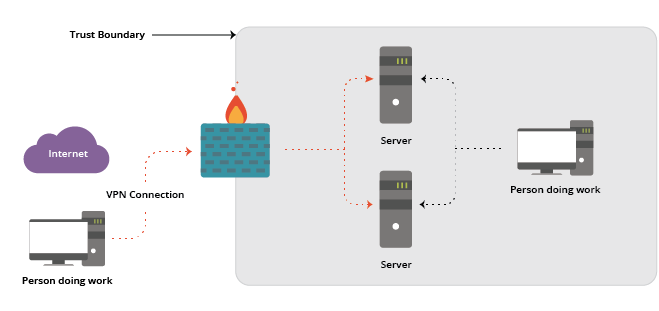

An additional problem we’ve created is improperly extending this model to allow our users to work remotely. Using a VPN extends access to private servers to remote users without changing the trust boundaries.

The problem is that by identifying this person by a username and a password, we can no longer identify them by associating their login event with a physical location. So if and when a remote user’s log-in credentials are stolen, an attacker has full access to trusted resources within our Trust Boundary.

Additionally, we relinquish physical control over the user’s workstation itself. This leaves it open to local or remote attacks that were much harder to carry out in a controlled environment (i.e., behind the company firewall and within the Trust Boundary).

How do we solve for this problem?

Response to a Changing World

People need to do work. They need to use systems that are hosted in the cloud or on servers in a data center, or to work remotely against servers that still sit in the office server closet.

Right now, people need to work from home. When the current pandemic draws to a close, either a lot of people will choose to continue working from home or their employers will look forward to the reduction in overhead they will realize by having more of their employees work remotely.

This situation of supporting remote workers is unlikely to change, but it’s clear that something has to if we are to ensure adequate system security. The model that we’ve used in the past, that worked so well when everyone was in the office, is unsustainable.

Avoiding False Assumptions Leads to Robust Solutions

Let’s go back in time, to when we started using the internet.

We assumed at the time that we could maintain the existing Trust Boundary and put workarounds in place, like spam filters and stronger passwords and more expensive firewalls. But we learned quickly that more workarounds result in more complexity. This complexity increases the number of points of failure, resulting in a brittle system that is likely to fail spectacularly and repeatedly.

I suggest a more robust solution, starting with a simple first step.

Go back to the beginning with all users looking the same, but with a new twist: all users live outside the Trust Boundary, and no one has special “high trust” access to systems within it.

This new model no longer makes assumptions about trusted users. Rather, it assumes that all users are untrustworthy.

To clarify, the move isn’t to change your current security stance. The new model asks us to assume that everyone is already an untrusted remote/distributed user (even if they’re in your office), and to make sure that there are no exceptions that add complexity to the system.
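The difference between the two models can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `AccessRequest` fields, the `10.0.0.0/8` office range, and both decision functions are hypothetical names invented for the example, not part of any product. The point it shows is that the new model never consults network location when deciding whether to allow a request.

```python
import ipaddress
from dataclasses import dataclass

# Hypothetical office address range, assumed only for this illustration.
OFFICE_NETWORK = ipaddress.ip_network("10.0.0.0/8")


@dataclass(frozen=True)
class AccessRequest:
    user: str
    source_ip: str
    identity_verified: bool   # e.g. the user passed strong authentication
    permission_granted: bool  # e.g. the user's role allows this action


def perimeter_decision(request: AccessRequest) -> bool:
    """Old model: anything arriving from inside the firewall is trusted."""
    return ipaddress.ip_address(request.source_ip) in OFFICE_NETWORK


def zero_trust_decision(request: AccessRequest) -> bool:
    """New model: location is ignored; every request must prove itself."""
    return request.identity_verified and request.permission_granted


# Malware running on an office workstation, with no verified identity.
inside = AccessRequest("unknown", "10.0.0.7", False, False)
# A legitimate employee working from home.
remote = AccessRequest("sarah", "203.0.113.20", True, True)

for req in (inside, remote):
    print(req.user, perimeter_decision(req), zero_trust_decision(req))
```

Under the old model the unverified request from inside the office is allowed and the verified remote employee is not. Under the new model the outcomes are reversed, and the same rule applies to everyone.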

If you’re not sure that you can trust the actors in your system, what changes does that require? Well, maybe not a lot. It’s likely that some of these items are in place in some form already but aren’t fully developed.

Here are the top three things I would do to get things “solid”:

- Maintain simplicity by managing all users in the same way.

Not a “to-do” in our top 3 per se but a necessary mindset. In each of the following items, be sure to keep things as simple as possible. Manage everyone the same way, from the mail room to the C-suite. Attackers specifically target executives in companies because they often have more access, are very busy/inattentive, and tend to avoid inconvenient security controls.

- Strongly authenticate all users, everywhere.

Computers are not very good at telling the difference between Sarah down the hall in Accounting and Karl in Russia. Multi-Factor Authentication (MFA) addresses this by verifying a user’s identity with at least two independent factors of identification.

The most common factors are:

- Something you know (typically a username/password)

- Something you have (a single device like a cell phone that is hard to reproduce)

- Something you are (a biometric test, like a fingerprint)

- Somewhere you are (your location, maybe identified by an IP address)

- Something you do (a gesture or physical cadence)

By combining two or more of these authentication factors, your systems can be far more confident that your users are who they say they are, which allows you to provide them with levels of expanded access. Once you have a reliable system of authentication in place, you can integrate it with every system, providing what we call “Single Sign-On.”
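The key detail in the list above is that the factors must come from different categories: two passwords are still only “something you know.” A minimal sketch of that check follows. The `Factor` enum, the `VerifiedCredential` type, and `is_strongly_authenticated` are hypothetical names made up for this example, and the sketch assumes each credential has already been verified by some other component.

```python
from dataclasses import dataclass
from enum import Enum


class Factor(Enum):
    KNOWLEDGE = "something you know"
    POSSESSION = "something you have"
    INHERENCE = "something you are"
    LOCATION = "somewhere you are"
    BEHAVIOR = "something you do"


@dataclass(frozen=True)
class VerifiedCredential:
    factor: Factor
    description: str


def is_strongly_authenticated(credentials, required_factors=2):
    """Return True only if enough *distinct* factor categories were verified."""
    distinct_factors = {credential.factor for credential in credentials}
    return len(distinct_factors) >= required_factors


password = VerifiedCredential(Factor.KNOWLEDGE, "password")
security_question = VerifiedCredential(Factor.KNOWLEDGE, "mother's maiden name")
phone_code = VerifiedCredential(Factor.POSSESSION, "code from phone app")

# Two knowledge factors count as one category, so this is not MFA.
print(is_strongly_authenticated([password, security_question]))
# A password plus a code from a registered phone covers two categories.
print(is_strongly_authenticated([password, phone_code]))
```

Counting categories rather than credentials is the design choice that matters here: an attacker who steals one password can often guess or steal a second one, but is much less likely to also hold the user’s phone.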

- Tightly control access to everything.

There are two ways to assign permissions. The more convenient method is to assign a user full access to everything and then disable access to those parts of the system you might not want that user to have access to.

While this seems like a sensible approach, it’s prone to failure – you only need to overlook one check box to allow a person more access than they should have. Even the best-intentioned user can make a mistake and delete something. Additionally, doing this for every user in the system compounds the number of mistakes that you can make when assigning permissions.

A more complete approach is to combine an additive approach, called “default deny all,” with the use of roles to grant similar permissions to a set of similar users. We refer to this as “role-based access control.” Simply create a group of users who share the same job and assign permissions to them one at a time until they have sufficient access to fulfill their role. And then stop. Work through every department in the company until everyone has the access they need.
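Here is a small sketch of what “default deny all” combined with roles might look like in code. The role names, permission strings, and the `is_allowed` helper are all hypothetical and chosen only for illustration; a real system would load them from a directory service or database rather than from dictionaries.

```python
# Permissions are granted to roles, one at a time, and never to individuals.
ROLE_PERMISSIONS = {
    "accounting": {"invoices:read", "invoices:write"},
    "support": {"tickets:read", "tickets:write", "invoices:read"},
}

# Users get access only through the roles they belong to.
USER_ROLES = {
    "sarah": {"accounting"},
    "devon": {"support"},
}


def is_allowed(user: str, permission: str) -> bool:
    """Default deny: allow only if one of the user's roles grants the permission."""
    for role in USER_ROLES.get(user, set()):
        if permission in ROLE_PERMISSIONS.get(role, set()):
            return True
    # Unknown users, unknown roles, and unlisted permissions all end up here.
    return False


print(is_allowed("sarah", "invoices:write"))
print(is_allowed("sarah", "tickets:read"))
print(is_allowed("karl", "invoices:read"))
```

Because the function falls through to `False`, forgetting to list a permission results in too little access rather than too much. That is the failure mode you want: a user who is missing access will ask for it, while a user with excess access usually will not mention it.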

- Assume that something is going to go wrong.

Lots of things can go wrong, from system corruption to accidental deletion to ransomware attacks. Let’s just assume that they will. We can turn this from unmitigated disaster to inconvenient nuisance simply by ensuring that all of our systems and assets are backed up.

Start with an inventory of internal systems, and work through finding a way to back them up to an offsite, secure location. The traditional method has been to back up to an inexpensive medium like tape or removable cartridge. Today you can often use inexpensive online backup systems to accomplish the same task without having to physically handle backup media.

Once done with internal/local systems, it’s useful to make sure that you back up as many of your online systems as possible. There are even services that can back up online mail/data services like Gmail or Office 365 to another cloud provider’s storage facility, so that you can recover from human failures as well.

Finally, no backup system is truly in place without a regular test of that system. Work with your team to do test restores of each of your systems on a regular schedule. This will provide you with the confidence to be sure that you can weather the storm if and when there’s a problem with your data or systems.
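Part of a test restore can be automated. The sketch below assumes a simple setup: the backup has been restored into its own directory and the original files are still available for comparison. It then reports every file that is missing from the restore or whose contents differ. The function names `sha256_of` and `verify_restore` are invented for this example; many backup tools offer their own verification features, which are worth checking first.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Hash a file in chunks so large files do not need to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_restore(original_dir, restored_dir):
    """Return relative paths that are missing from, or differ in, the restore."""
    original_dir, restored_dir = Path(original_dir), Path(restored_dir)
    problems = []
    for source in sorted(original_dir.rglob("*")):
        if not source.is_file():
            continue
        relative = source.relative_to(original_dir)
        restored = restored_dir / relative
        if not restored.is_file() or sha256_of(source) != sha256_of(restored):
            problems.append(relative.as_posix())
    return problems
```

An empty list means every original file came back intact. Anything else is a list of files to investigate before you need that backup for real.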

Treat all of your users with a basic set of solid security principles and defend your systems with the assumption that they’re at risk no matter where your team is working from. You’ll be more comfortable that your assets are defended and secure, and your team will be able to get their job done with a system that is as simple as possible.

Jonathan Coupal, CISSP, MCSE, is the Chief Technology Officer at ITX Corp., headquartered in Rochester, NY. Jonathan specializes in security, cloud and hybrid hosting architecture, problem solving, and research.